How to Get Indexed on Google Faster for Free

Indexing tools became a trend in late 2023, and some of them can be expensive. Let me show you how to mass-index your pages for free using Google Cloud's Web Search Indexing API.

Yes, this will take 30 minutes instead of 15, but you'll save some money and likely learn something new.

🚧 Heads Up

This doesn't require any programming knowledge, but we will be using the terminal and installing some packages through it. Don't be scared if you're not a programmer. You got this!

1. Create Google Cloud project

If you don't already have one, go to the Google Cloud Console and create a new project. Give it a name and click Create.

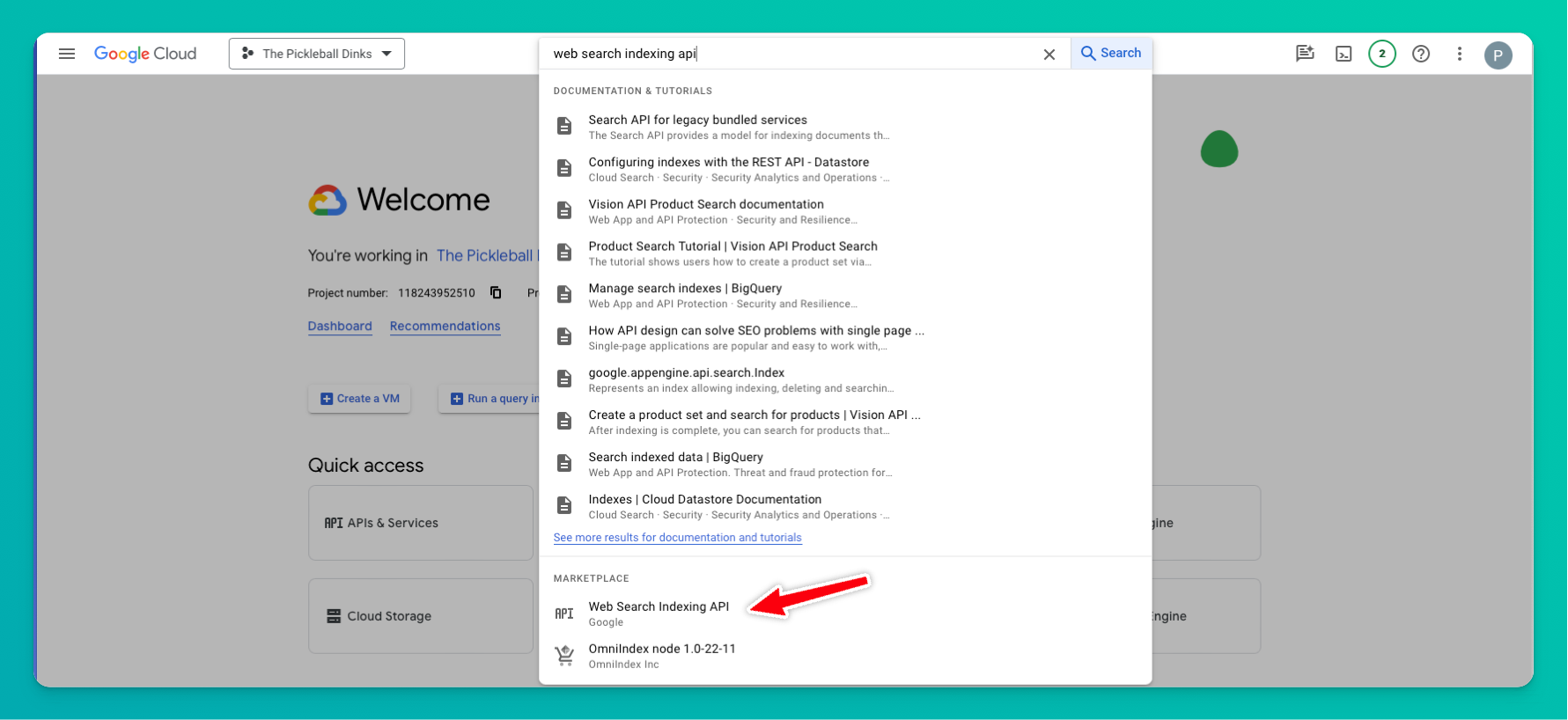

2. Enable Web Search Indexing API

In the console's search bar, look for "web search indexing api".

Click Enable. If you've already enabled it, click Manage instead, since we'll need to be on that page for step 3.

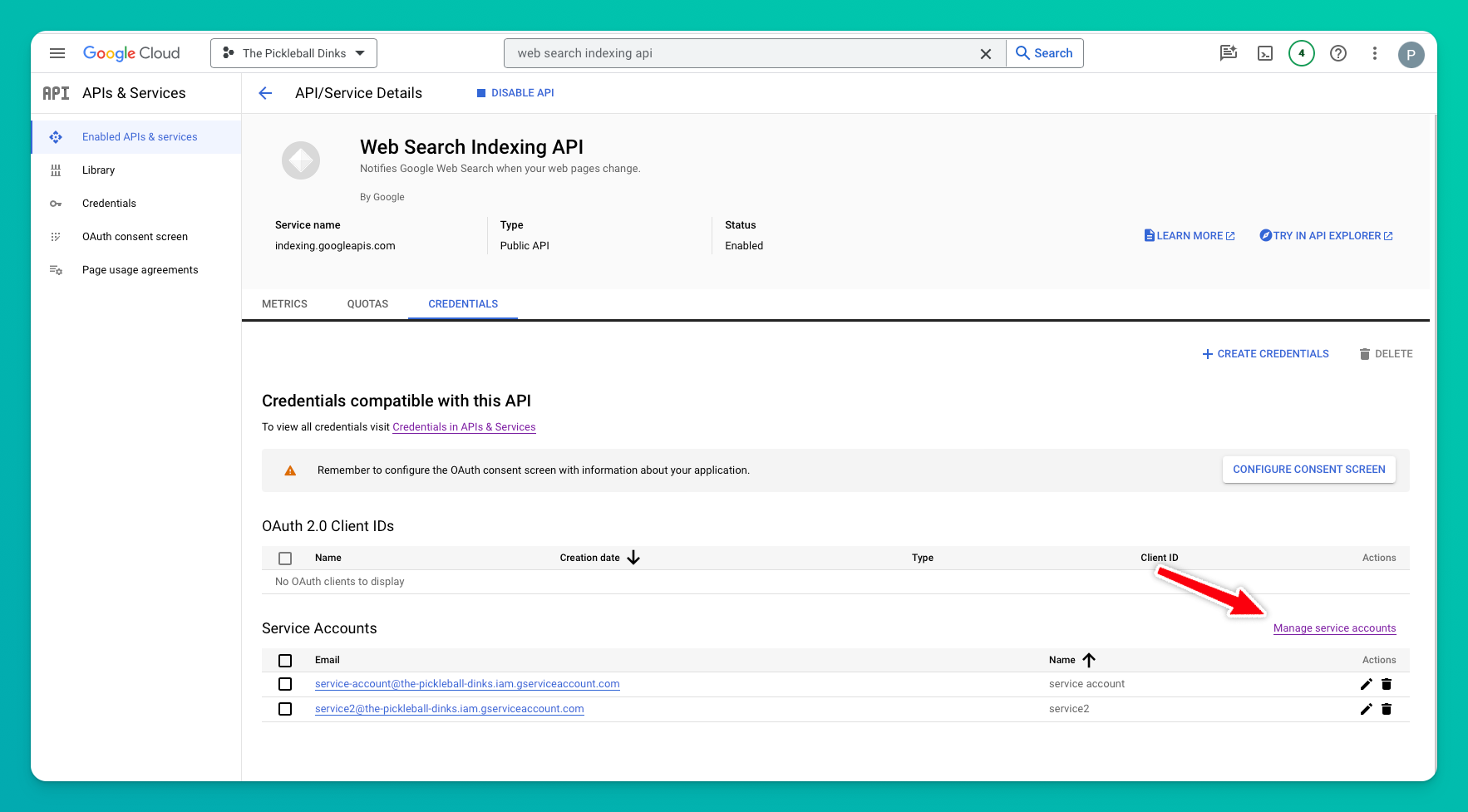

3. Create a service account

From the Web Search Indexing API page, go to Enabled APIs & services -> Credentials -> Manage service accounts.

Press + CREATE SERVICE ACCOUNT.

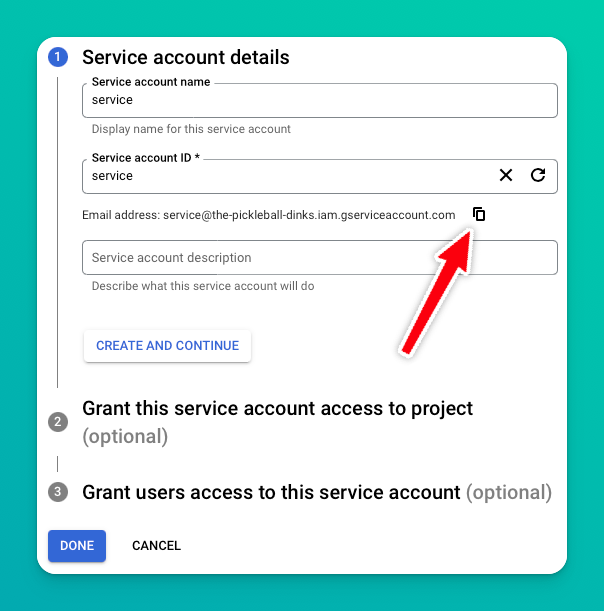

4. Provide service account details

You can enter anything for the service account name. Copy the email address and save it somewhere. We'll need it for Google Search Console in step 6.

Press Next and assign a role (I typically choose Owner), then press Done.

5. Download service account key

Once your service account is created, download its key: click into the service account, go to Keys -> Add Key -> Create new key, select JSON, and click Create.

Our script in step 7 will need this.
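The key you just downloaded is a JSON file, and the service account email you paste into Search Console in step 6 is stored in it under client_email. As a minimal sketch, assuming the standard service-account key layout ("type": "service_account" plus a client_email field), this reads the key and returns that address so you can double-check you're granting access to the right account. The function name read_service_account_email is hypothetical and not part of the indx script.

```python
import json
from pathlib import Path


def read_service_account_email(key_path):
    """Return the client_email stored in a service-account JSON key file.

    Assumes the standard layout Google Cloud uses for service-account keys.
    """
    data = json.loads(Path(key_path).read_text(encoding="utf-8"))
    # Guard against grabbing some other kind of JSON credential by mistake.
    if data.get("type") != "service_account":
        raise ValueError(f"{key_path} does not look like a service-account key")
    email = data.get("client_email")
    if not email:
        raise ValueError(f"{key_path} has no client_email field")
    return email
```

For example, read_service_account_email("key_file.json") gives you the address to paste in step 6.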

6. Set up service account

Open Google Search Console. From your property, go to Settings -> Users and permissions -> Add user.

Paste in the service account email you copied in step 4.

I ran into a weird issue here. For whatever reason, I had to save the user with Full permission first, then go back and change the permission to Owner to get it to work. Setting it to Owner right away gave me 429 errors. This may have been unrelated, but it's what I noticed.

Either way, make sure the user is set up as an Owner.

7. Create indexing Python script

You have two options here: one for programmers and one for non-programmers.

For programmers, git clone this repository - https://github.com/dinokukic/indx

For non-programmers, or anyone who doesn't want to deal with git, open this link and copy its contents - https://raw.githubusercontent.com/dinokukic/indx/main/indx.py

Open a text editor on your computer, paste the contents, and save the file as indx.py in a folder of your choice.

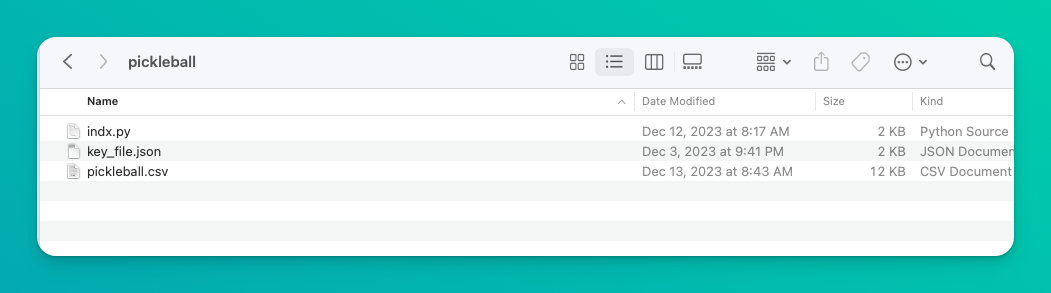
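If you're curious what a script like indx.py does under the hood, here is a minimal sketch. It is not the actual contents of indx.py. It assumes the google-api-python-client package from step 10 (which pulls in google-auth), the Indexing API scope https://www.googleapis.com/auth/indexing, and the urlNotifications.publish method, whose request body carries the page URL and a type of URL_UPDATED (or URL_DELETED for removed pages). The helper names build_publish_body, make_service, and publish are hypothetical.

```python
# Minimal sketch of an Indexing API client (not the actual indx.py).
SCOPES = ["https://www.googleapis.com/auth/indexing"]
VALID_TYPES = {"URL_UPDATED", "URL_DELETED"}


def build_publish_body(url, notification_type="URL_UPDATED"):
    """Build the JSON body for one urlNotifications.publish request."""
    if notification_type not in VALID_TYPES:
        raise ValueError(f"type must be one of {sorted(VALID_TYPES)}")
    if not url.startswith(("http://", "https://")):
        raise ValueError(f"not an absolute URL: {url!r}")
    return {"url": url, "type": notification_type}


def make_service(key_file="key_file.json"):
    """Create an authorized Indexing API client from a service-account key."""
    # Imported here so build_publish_body can be tried without the packages.
    from google.oauth2 import service_account
    from googleapiclient.discovery import build

    creds = service_account.Credentials.from_service_account_file(
        key_file, scopes=SCOPES
    )
    return build("indexing", "v3", credentials=creds)


def publish(service, url):
    """Tell Google that url was added or updated; returns the response dict."""
    body = build_publish_body(url)
    return service.urlNotifications().publish(body=body).execute()
```

Usage would look like service = make_service() followed by publish(service, "https://example.com/some-page") for each URL. Failed requests raise googleapiclient.errors.HttpError, which is where status codes like 403 and 429 surface.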

8. Copy key file

Move the key file into the same folder as your indx.py file and rename it to key_file.json.

We rename it because the Python script looks for a file with exactly that name in that folder.
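To see why the exact name matters, here's a minimal sketch of how a script can look for its key in a folder and fail with a helpful message when the downloaded file wasn't renamed. It's an illustration only, not necessarily how indx.py handles a missing key, and the function name find_key_file is hypothetical.

```python
from pathlib import Path

KEY_FILE_NAME = "key_file.json"  # the name this step asks for


def find_key_file(folder):
    """Return the path to key_file.json in folder, or explain what's wrong."""
    folder = Path(folder)
    key_path = folder / KEY_FILE_NAME
    if key_path.is_file():
        return key_path
    # Common slip: the key still has the long name Google gave it on download.
    other_json = sorted(p.name for p in folder.glob("*.json"))
    hint = f" Found these JSON files instead: {other_json}" if other_json else ""
    raise FileNotFoundError(f"No {KEY_FILE_NAME} in {folder.resolve()}.{hint}")
```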

9. Create your list of URLs

The script needs a list of URLs to index. I used a tool called Free XML Sitemap URL Extractor to pull all my URLs from my sitemap.

Copy the results and paste them into a .csv file, one URL per line. Don't include a header row: the first URL you want to index should be on the first line.
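If you'd rather skip the online extractor, a short sketch using only Python's standard library can do the same job. It assumes a standard XML sitemap (namespace http://www.sitemaps.org/schemas/sitemap/0.9) saved locally, with each page listed in a loc element. A sitemap index, which points to other sitemaps, would need each child sitemap processed separately. It writes one URL per line with no header, as this step requires. The function name sitemap_to_csv is hypothetical.

```python
import csv
import xml.etree.ElementTree as ET

SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_to_csv(sitemap_path, csv_path):
    """Copy every loc URL from a local sitemap.xml into a header-less CSV.

    Returns the number of URLs written.
    """
    root = ET.parse(sitemap_path).getroot()
    urls, seen = [], set()
    for loc in root.iterfind(".//sm:loc", SITEMAP_NS):
        url = (loc.text or "").strip()
        if url and url not in seen:  # skip blanks and duplicates
            seen.add(url)
            urls.append(url)
    with open(csv_path, "w", newline="", encoding="utf-8") as f:
        writer = csv.writer(f)
        for url in urls:
            writer.writerow([url])  # one URL per row, no header
    return len(urls)
```

For example, sitemap_to_csv("sitemap.xml", "pickleball.csv") produces a file ready for step 11.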

10. Install packages

Open a terminal and navigate to your folder with the "cd" command (run "pwd" to confirm where you are). Then run the following command to install the required packages:

```
pip install --upgrade google-api-python-client google-auth-httplib2 google-auth-oauthlib
```
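If the script later complains about a missing module, a quick check is to ask the same Python interpreter whether it can find each package. This sketch maps each pip package name above to the module name it's imported as. If something shows up as missing, pip most likely installed into a different Python than the one running the script, and running "python -m pip install ..." instead usually avoids that. The function name missing_packages is hypothetical.

```python
import importlib.util

# pip package name -> the module name Python imports it as
REQUIRED = {
    "google-api-python-client": "googleapiclient",
    "google-auth-httplib2": "google_auth_httplib2",
    "google-auth-oauthlib": "google_auth_oauthlib",
}


def missing_packages(required=REQUIRED):
    """Return the pip names whose modules this Python interpreter can't find."""
    missing = []
    for pip_name, module_name in required.items():
        try:
            found = importlib.util.find_spec(module_name) is not None
        except (ImportError, ValueError):
            found = False  # e.g. a dotted name whose parent package is absent
        if not found:
            missing.append(pip_name)
    return missing


if __name__ == "__main__":
    gaps = missing_packages()
    print("All set!" if not gaps else "Still missing: " + ", ".join(gaps))
```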

11. Run your script

At this point your folder should look something like this:

Run the following script. Be sure to replace the csv filename with whatever you named yours.

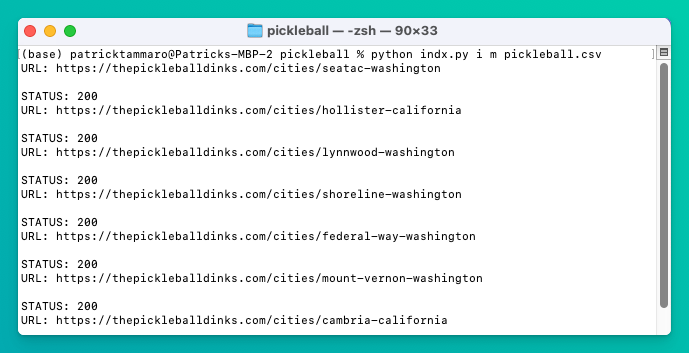

python indx.py i m pickleball.csv

If all goes well, you should see status 200 responses.
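When you're submitting hundreds of URLs, it helps to tally the responses rather than eyeball them. Here's a minimal sketch that groups (url, status) pairs and attaches a short hint to failures. The hints reflect common Indexing API errors: 403 usually means the service account isn't an Owner of the property in Search Console (step 6), and 429 usually means the daily quota is used up. The input format and the function name summarize are assumptions for illustration, not indx.py's actual output.

```python
from collections import Counter

# Short hints for the statuses people most often see from the Indexing API.
HINTS = {
    403: "permission denied: is the service account an Owner in Search Console?",
    429: "quota exceeded: wait for the daily quota to reset",
}


def summarize(results):
    """Tally (url, status_code) pairs.

    Returns (counts_by_status, failures), where failures lists
    (url, status, hint) for every non-200 response.
    """
    counts = Counter()
    failures = []
    for url, status in results:
        counts[status] += 1
        if status != 200:
            failures.append((url, status, HINTS.get(status, "check the error message")))
    return counts, failures
```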

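You can submit up to 200 URLs a day. I've read that this limit applies per service account, though I haven't tested it; if so, you could create more service accounts and index more than 200 pages a day. Note that Google's Indexing API quota documentation describes the default as 200 publish requests per day per project, so extra service accounts in the same Cloud project may not raise the limit.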
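Because of that daily cap, a long URL list has to be spread over several days. This sketch splits a list into consecutive batches of at most 200 (the default mentioned above; change per_day if your quota differs) and writes each batch to its own header-less CSV, so you can run the script on one file per day. For example, 800 URLs become four files. The function names and the batch_N.csv naming are hypothetical.

```python
import csv
from pathlib import Path


def daily_batches(urls, per_day=200):
    """Split urls into consecutive lists of at most per_day items."""
    if per_day < 1:
        raise ValueError("per_day must be at least 1")
    return [urls[i:i + per_day] for i in range(0, len(urls), per_day)]


def write_batch_files(urls, folder, per_day=200, prefix="batch"):
    """Write each batch to its own header-less CSV: batch_1.csv, batch_2.csv, ..."""
    paths = []
    for n, batch in enumerate(daily_batches(urls, per_day), start=1):
        path = Path(folder) / f"{prefix}_{n}.csv"
        with path.open("w", newline="", encoding="utf-8") as f:
            csv.writer(f).writerows([u] for u in batch)  # one URL per row
        paths.append(path)
    return paths
```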

My Examples

I've gotten into programmatic SEO (pSEO) recently, which is what sent me down this indexing rabbit hole. You can manually request indexing in Google Search Console (GSC), but that takes way too long and doesn't scale.

I started seeing these indexing tools around the internet and was curious how they worked. As it turns out, some folks have built free scripts that do the same thing!

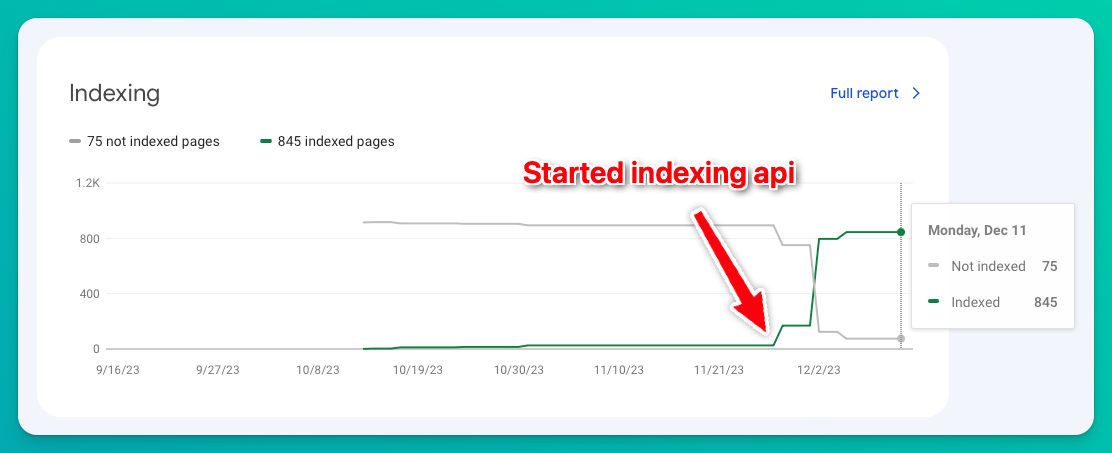

ThePickleballDinks

This website is my pickleball blog. I'm obsessed with pickleball and write about it from time to time. The site also serves up a directory of places to play pickleball, broken down by state and city, and this indexing script is helping me get those pages indexed faster.

JustTheExercise

I came across a dataset of over 800 exercises covering how to do them, the primary muscles worked, the equipment needed, and some pictures.

I decided to try pSEO and use this dataset as practice. Thanks to AI, I got some unique-lookin' photos too.

You can see this is a new site. It had been around for 1.5 months and had 800+ pages, but only 26 were indexed. How am I supposed to rank on Google? Once I started using this strategy, the indexed count jumped to 800+ almost overnight.